Ilya Sutskever: A Pioneer in the Era of Generative AI

About this keynote: This keynote introduces Ilya Sutskever, one of the most influential researchers in the era of Generative AI, from his early days at the University of Toronto to co-founding OpenAI and Safe Superintelligence Inc.

Introduction

Ilya Sutskever stands as one of the most influential figures in modern artificial intelligence. His contributions span from foundational breakthroughs in computer vision with AlexNet to the development of sequence-to-sequence learning, and from the creation of the GPT series to pioneering work on AI alignment and superintelligence.

Connect with Ilya

Bio & Links

Google Scholar

Twitter/X

OpenAI

Safe Superintelligence Inc.

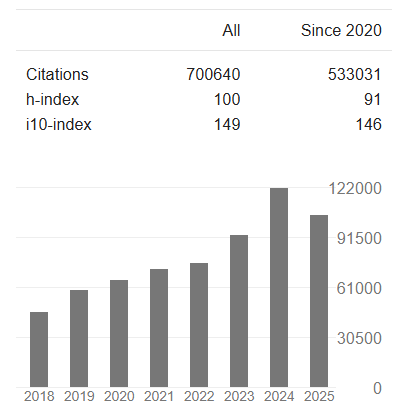

Ilya Sutskever's Google Scholar profile showcasing his tremendous impact on the field

Education and Career Timeline

Ilya’s journey from a graduate student to one of AI’s most influential leaders:

| Year | Milestone |

|---|---|

| 2002 | Moved to Canada, enrolled at University of Toronto |

| 2005 | B.Sc. in Mathematics, University of Toronto |

| 2007 | M.Sc. in Computer Science, University of Toronto |

| 2013 | Ph.D. in Computer Science under Geoffrey Hinton, University of Toronto |

| 2013 | DNNResearch acquired by Google; joined Google Brain as Research Scientist |

| 2015 | Left Google; co-founded OpenAI, served as Chief Scientist |

| May 2024 | Departed OpenAI to pursue new project |

| June 2024 | Co-founded Safe Superintelligence Inc. (SSI) with Daniel Gross and Daniel Levy |

| July 2025 | Became CEO of SSI after Daniel Gross departed to Meta Platforms |

Early Days: The Toronto Machine Learning Group

Ilya’s foundational years were spent in the Machine Learning group at the University of Toronto, working under the mentorship of the legendary Geoffrey Hinton. This period laid the groundwork for the deep learning revolution that would transform AI.

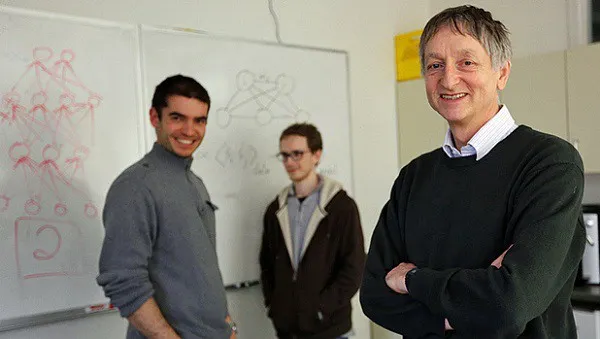

The legendary trio: Ilya Sutskever, Alex Krizhevsky, and Geoffrey Hinton (2012)

This photo captures a pivotal moment in AI history—three researchers who would change the field forever.

ImageNet Competition 2012: AlexNet Revolution

In 2012, AlexNet shattered expectations at the ImageNet competition, demonstrating the power of deep convolutional neural networks and GPU acceleration. This watershed moment marked the beginning of the deep learning era.

Competition Results

| Model | Top-1 (val) | Top-5 (val) | Top-5 (test) |

|---|---|---|---|

| (2nd Model) | / | / | 26.2% |

| AlexNet | 36.7% | 15.4% | 15.3% |

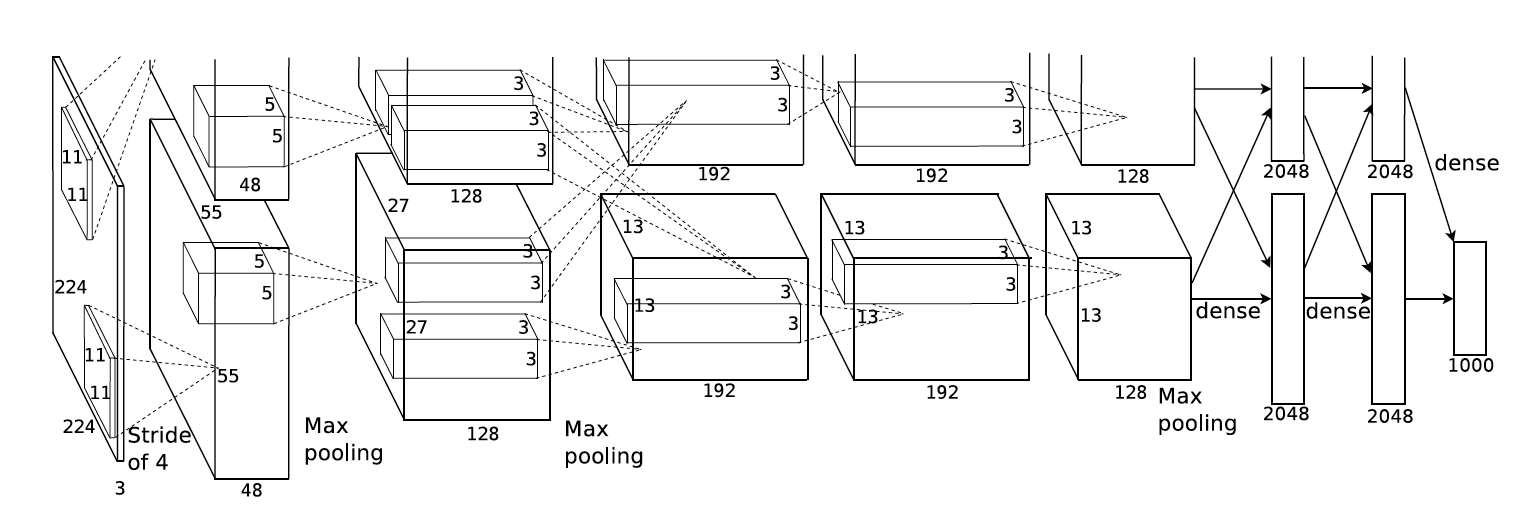

AlexNet architecture: A breakthrough in deep convolutional neural networks

The dramatic improvement over second place (15.3% vs 26.2% top-5 error) proved that deep learning was not just an incremental improvement but a paradigm shift in computer vision.

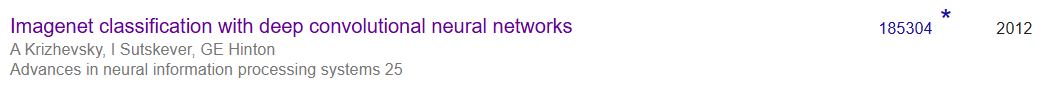

AlexNet's citation impact demonstrates its foundational role in modern AI

Citation:

Krizhevsky, A., Sutskever, I., & Hinton, G. E. (2012). ImageNet Classification with Deep Convolutional Neural Networks. In Neural Information Processing Systems (NIPS).

PhD Thesis: Training Recurrent Neural Networks

Ilya’s PhD thesis, titled “Training Recurrent Neural Networks” (2013), addressed one of the most challenging problems in deep learning: training RNNs on sequences with long-term dependencies. This work laid crucial groundwork for modern sequence modeling.

At the time, he was pioneering the use of RNNs for Natural Language Processing, developing techniques that would later become standard in the field.

Thesis:

Sutskever, I. (2013). Training Recurrent Neural Networks. PhD thesis, University of Toronto.

Sequence-to-Sequence Learning: Foundation of Modern LLMs

One of Ilya’s most influential contributions came with the introduction of Sequence-to-Sequence (Seq2Seq) modeling. This foundational work, which used LSTMs (Long Short-Term Memory networks) to map input sequences to output sequences, laid the conceptual groundwork for all modern large language models.

Key Insight

The Seq2Seq model introduced the paradigm of encoding an input sequence into a fixed-size representation and then decoding it into an output sequence. This elegant approach would become the foundation of transformer architectures and LLMs.

Interestingly, Ilya later referred to LSTMs as a “horizontally displaced version of ResNet,” showing the deep connections between seemingly different architectural innovations.

The Seq2Seq paper's citation impact reflects its foundational role in NLP

Recognition

This work received the NeurIPS Test of Time Award 2024, recognizing its lasting impact on the field. Watch Ilya’s award talk.

Citation:

Sutskever, I., Vinyals, O., & Le, Q. V. (2014). Sequence to Sequence Learning with Neural Networks. In Advances in Neural Information Processing Systems (NIPS).

Working at Google (2013-2015)

Following the success of AlexNet, DNNResearch (the startup formed by Hinton, Krizhevsky, and Sutskever) was acquired by Google in 2013. At Google Brain, Ilya contributed to several groundbreaking projects:

- TensorFlow: The deep learning framework that would become the industry standard

- AlphaGo: The revolutionary system that defeated world champion Go players

These years at Google exposed Ilya to large-scale machine learning systems and reinforced his vision for artificial general intelligence.

Co-founding OpenAI and the GPT Series

In 2015, Ilya made the pivotal decision to leave Google and co-found OpenAI as its Chief Scientist. This marked the beginning of the GPT era, which would transform both AI research and society.

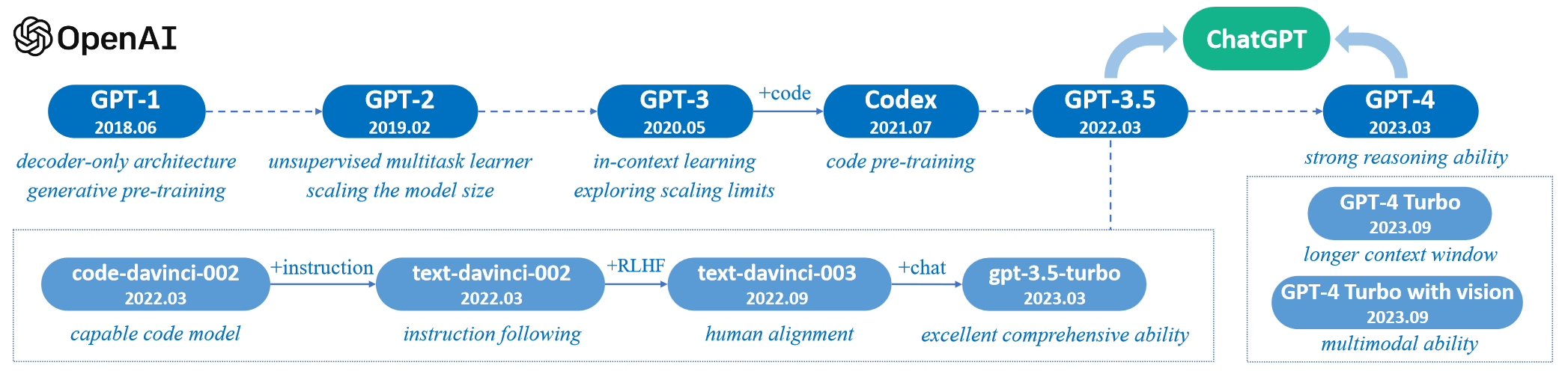

The evolution of the GPT series: From GPT-1 to GPT-4 and beyond

The GPT Journey

GPT-1 (2018): Improving Language Understanding by Generative Pre-Training

- Demonstrated that unsupervised pre-training followed by supervised fine-tuning could achieve strong performance across NLP tasks

- Introduced the transformer-based autoregressive language model paradigm

GPT-2 (2019): Language Models are Unsupervised Multitask Learners

- Showed that language models could perform multiple tasks without explicit supervision

- Famous for initially being considered “too dangerous to release” due to concerns about misuse

GPT-3 (2020): Language Models are Few-Shot Learners

- Scaled to 175 billion parameters

- Demonstrated remarkable few-shot learning capabilities

- Sparked the modern era of foundation models

InstructGPT (2022): Training Language Models to Follow Instructions with Human Feedback

- Introduced RLHF (Reinforcement Learning from Human Feedback) to align models with human preferences

- Set the stage for ChatGPT

GPT-4 (2023): GPT-4 Technical Report

- Multimodal capabilities (text and vision)

- Significant improvements in reasoning and reliability

- Powers many of today’s most advanced AI applications

GPT-4o (2024): Omnimodal AI

- Accepts any combination of text, audio, image, and video inputs

- Generates text, audio, and image outputs

- Real-time conversational capabilities with human-like response times

Other Groundbreaking Projects at OpenAI

Beyond GPT, Ilya led or contributed to several other transformative projects:

DALL-E Series

The DALL-E models brought text-to-image generation to mainstream attention:

- DALL-E (2021): Zero-shot text-to-image generation using transformers

- DALL-E 2 (2022): Hierarchical text-conditional image generation with CLIP latents (unCLIP)

- DALL-E 3 (2023): Improved prompt following through better image captioning

CLIP (2021)

Contrastive Language-Image Pre-training revolutionized vision-language understanding by learning aligned representations from 400 million image-text pairs scraped from the internet. CLIP enabled zero-shot transfer to numerous vision tasks and became a foundational model for multimodal AI.

Other Notable Works

- Codex (2021): GPT fine-tuned on code, powering GitHub Copilot

- GLIDE (2022): Photorealistic image generation with text-guided diffusion

- iGPT (2020): Generative pre-training from pixels

Alignment and Superintelligence

As AI systems became more powerful, Ilya increasingly focused on the critical challenge of alignment—ensuring that advanced AI systems remain beneficial and aligned with human values.

Superalignment at OpenAI

In July 2023, OpenAI announced the Superalignment project, co-led by Ilya Sutskever and Jan Leike, dedicating 20% of OpenAI’s compute to solving the problem of aligning superhuman AI systems.

The Alignment Challenge

As AI systems become more capable than humans, how can we ensure they remain aligned with human values when humans may not be capable of evaluating their behavior? This is the central challenge of superalignment.

Key Research:

- Weak-to-Strong Generalization (Burns et al., 2024): Can weak human supervision elicit the full capabilities of much stronger models?

- Governance of Superintelligence (2023): Policy frameworks for managing risks from advanced AI

Safe Superintelligence Inc. (SSI)

In June 2024, after departing OpenAI, Ilya co-founded Safe Superintelligence Inc. with Daniel Gross and Daniel Levy. SSI’s mission is singular: build safe superintelligence.

“We will pursue safe superintelligence in a straight shot, with one focus, one goal, and one product.” — SSI Mission Statement

In July 2025, Ilya became CEO of SSI, taking on an even greater leadership role in shaping the future of safe AI development.

Influence on the Field

Leopold Aschenbrenner’s influential essay “Situational Awareness” (2024), which discusses the trajectory toward AGI and superintelligence, is dedicated to Ilya Sutskever—a testament to his profound impact on thinking about advanced AI.

Impact and Legacy

Ilya Sutskever’s contributions to AI are immeasurable:

Technical Innovations

- AlexNet: Sparked the deep learning revolution

- Seq2Seq: Foundation of modern sequence modeling and LLMs

- GPT Series: Transformed how we interact with AI

- CLIP & DALL-E: Pioneered multimodal AI

Research Philosophy

- Emphasis on scale and compute as drivers of capability

- Focus on unsupervised learning and emergent capabilities

- Early recognition of the importance of alignment

Leadership

- Chief Scientist of OpenAI (2015-2024)

- Co-founder and CEO of Safe Superintelligence Inc. (2024-present)

- Mentor to a generation of AI researchers

Conclusion

From his early days working with Geoffrey Hinton in Toronto to leading the charge toward safe superintelligence, Ilya Sutskever has been at the forefront of every major breakthrough in modern AI. His unique combination of technical brilliance, research vision, and concern for AI safety makes him one of the most important figures shaping the future of artificial intelligence.

As we stand on the cusp of even more transformative AI capabilities, Ilya’s work on alignment and his commitment to building safe superintelligence may prove to be his most important contribution yet.

References

Key Papers

-

Krizhevsky, A., Sutskever, I., & Hinton, G. E. (2012). ImageNet Classification with Deep Convolutional Neural Networks. In Neural Information Processing Systems.

-

Sutskever, I., Vinyals, O., & Le, Q. V. (2014). Sequence to Sequence Learning with Neural Networks. In Advances in Neural Information Processing Systems.

-

Radford, A., Narasimhan, K., et al. (2018). Improving Language Understanding by Generative Pre-Training. OpenAI.

-

Radford, A., Wu, J., Child, R., Luan, D., Amodei, D., & Sutskever, I. (2019). Language Models are Unsupervised Multitask Learners. OpenAI.

-

Brown, T. B., et al. (2020). Language Models are Few-Shot Learners. In Neural Information Processing Systems.

-

Ramesh, A., et al. (2021). Zero-Shot Text-to-Image Generation. In International Conference on Machine Learning.

-

Radford, A., et al. (2021). Learning Transferable Visual Models From Natural Language Supervision. In International Conference on Machine Learning.

-

Ouyang, L., et al. (2022). Training Language Models to Follow Instructions with Human Feedback. arXiv preprint arXiv:2203.02155.

-

OpenAI (2023). GPT-4 Technical Report. arXiv preprint arXiv:2303.08774.

-

Burns, C., et al. (2024). Weak-to-Strong Generalization: Eliciting Strong Capabilities With Weak Supervision. In International Conference on Machine Learning.

Additional Resources

- OpenAI Blog: Introducing Superalignment

- OpenAI Blog: Governance of Superintelligence

- Aschenbrenner, L. (2024). Situational Awareness. Essay.

For a complete bibliography including all cited works, please refer to the PDF version of this keynote.

Part of the "People of GenAI" Series

This keynote is part of a series introducing the key researchers shaping the era of Generative AI.

Contact: bili_sakura@zju.edu.cn

© 2025 Sakura