About this keynote: This keynote gives an overview of diffusion models for image synthesis, tracing a timeline of key works and highlighting OpenAI's contributions.

Timeline of Novel Works on Diffusion Models at OpenAI

OpenAI (Tim Brooks, Yang Song)

iGPT (2020) → ADM (2021) → DALL-E (2021) → GLIDE (2022) → DALL-E 2 (2022) → DALL-E 3 (2023) → Consistency Models (2023, 2024, 2025)

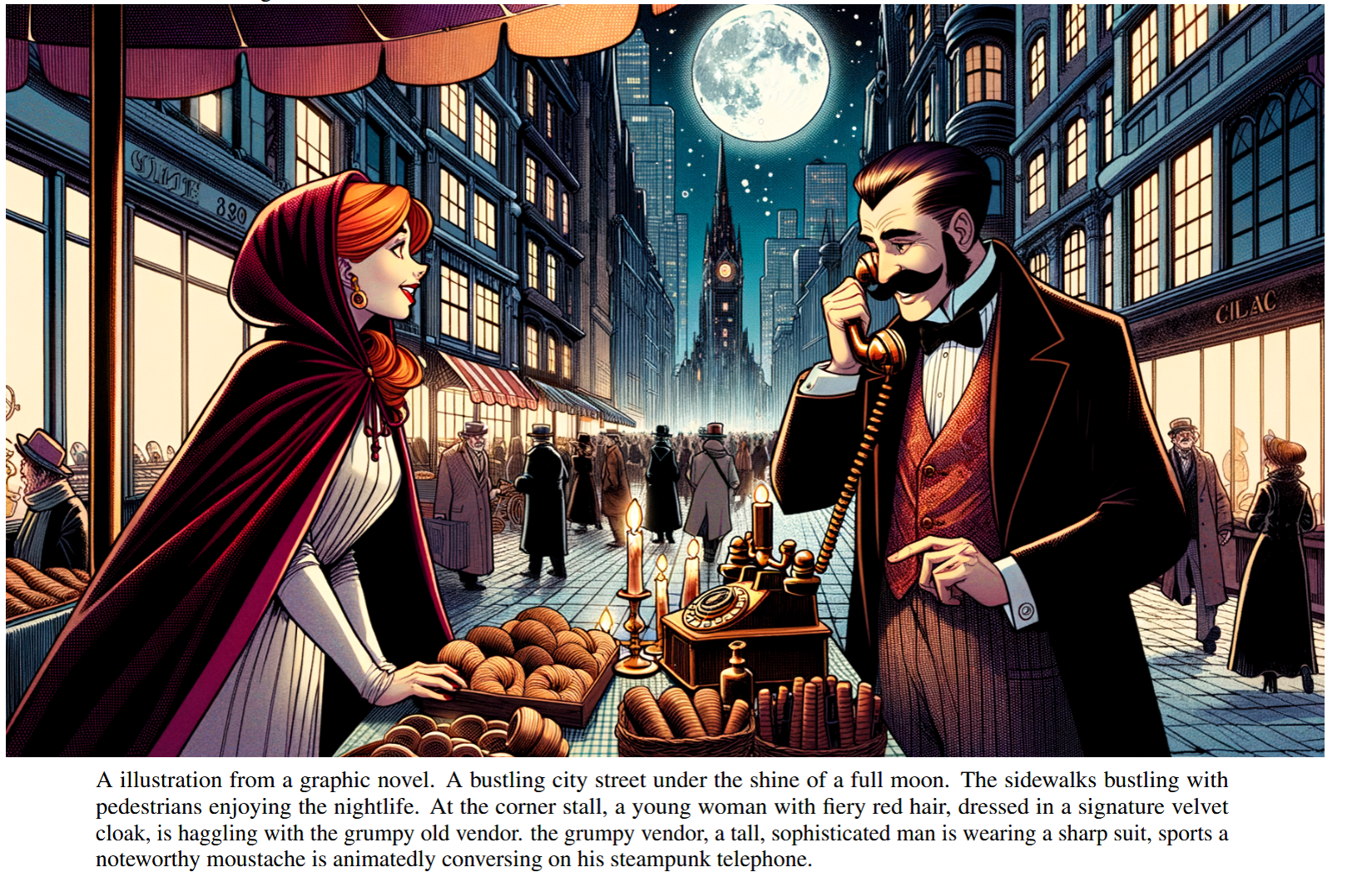

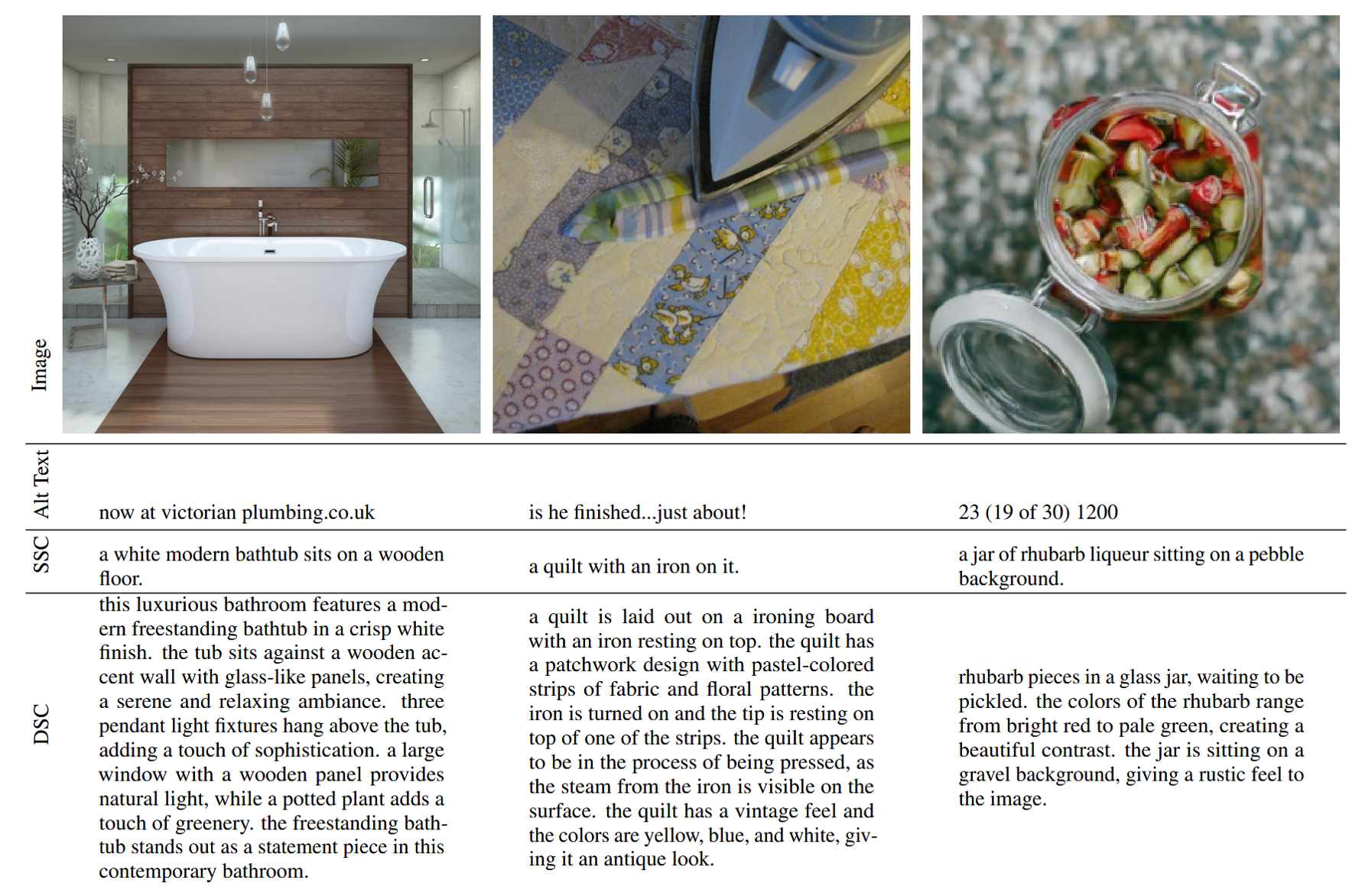

Broad Applications of Diffusion Models

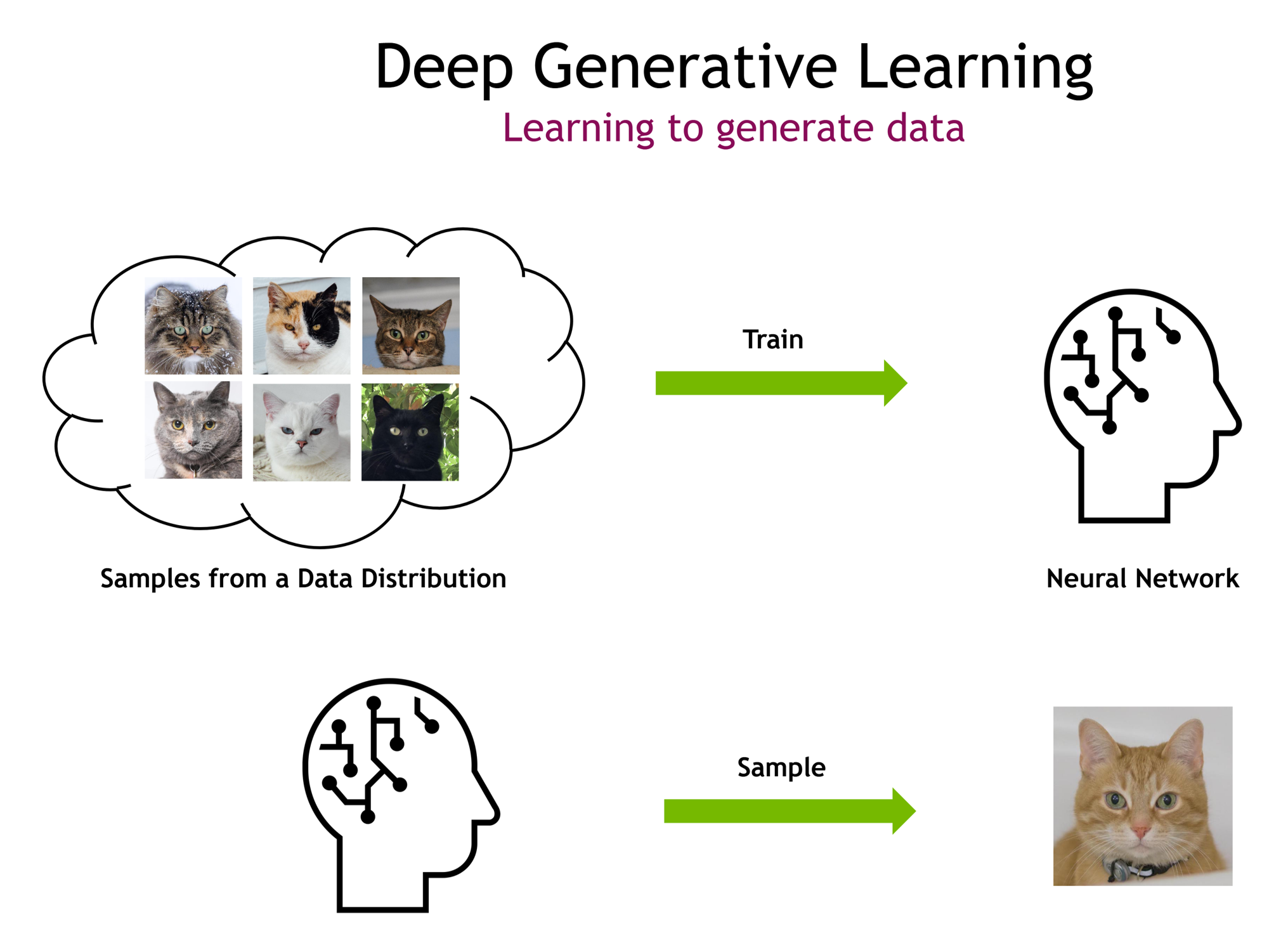

Deep Generative Learning

Learning to generate data

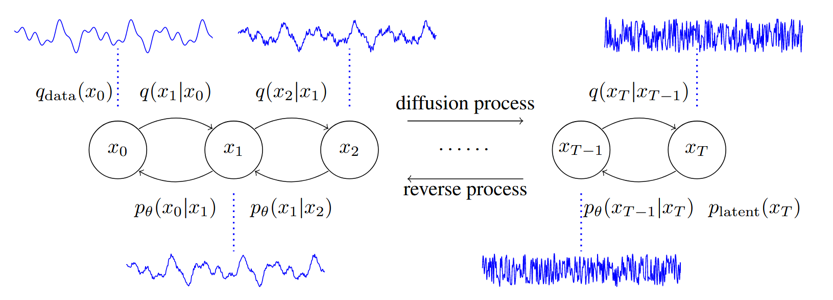

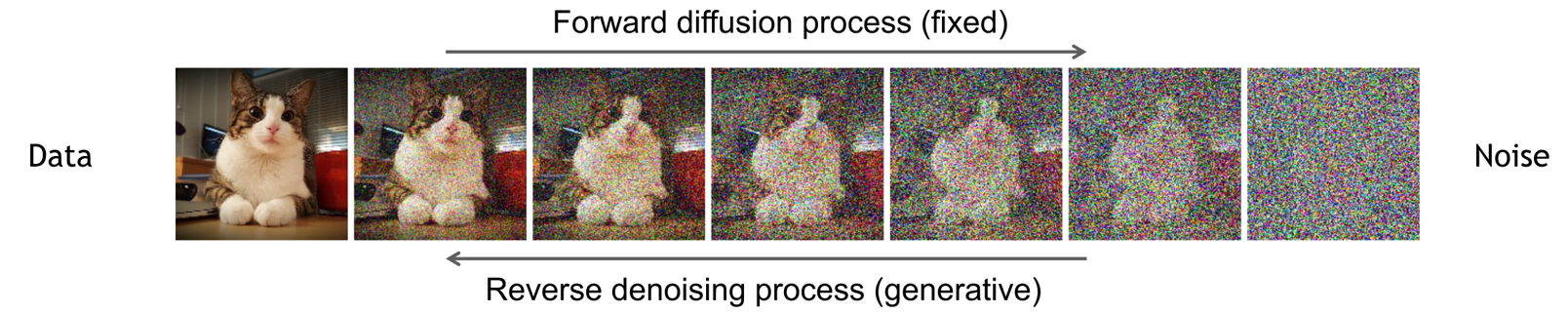

Source: CVPR 2023 Tutorial: Denoising Diffusion-Based Generative Modeling.

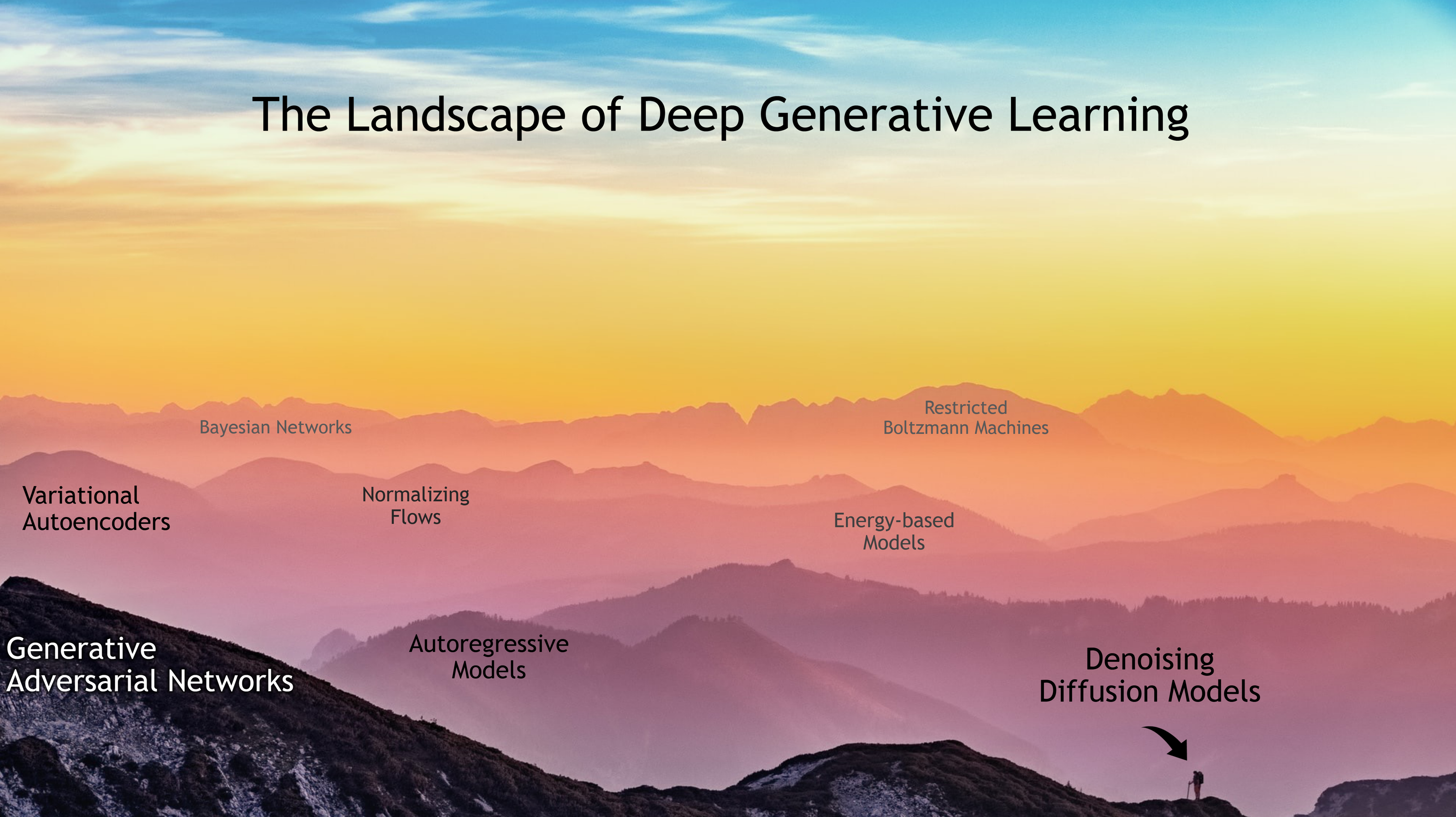

The Landscape of Deep Generative Learning

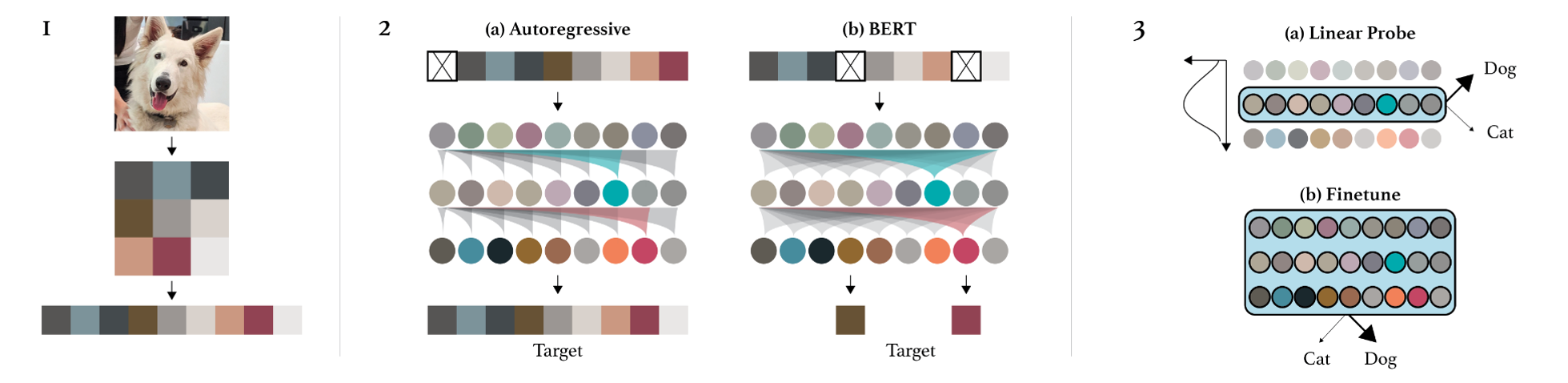

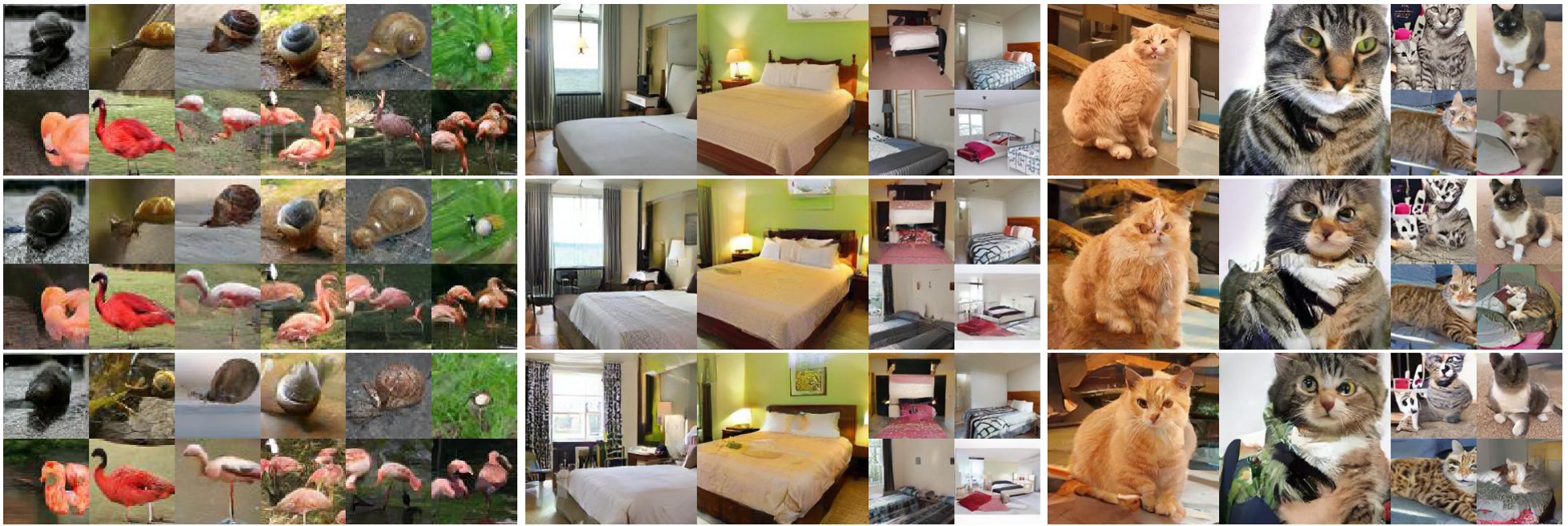

OpenAI – iGPT (Chen et al., 2020)

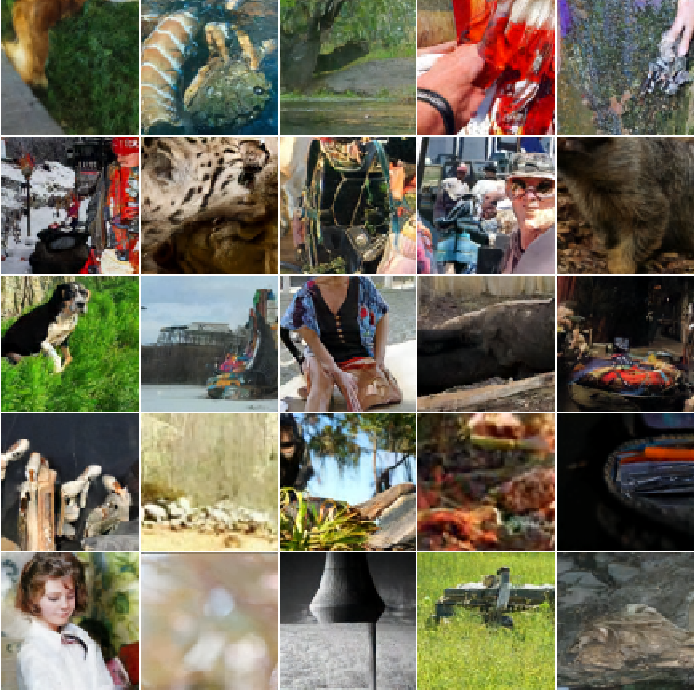

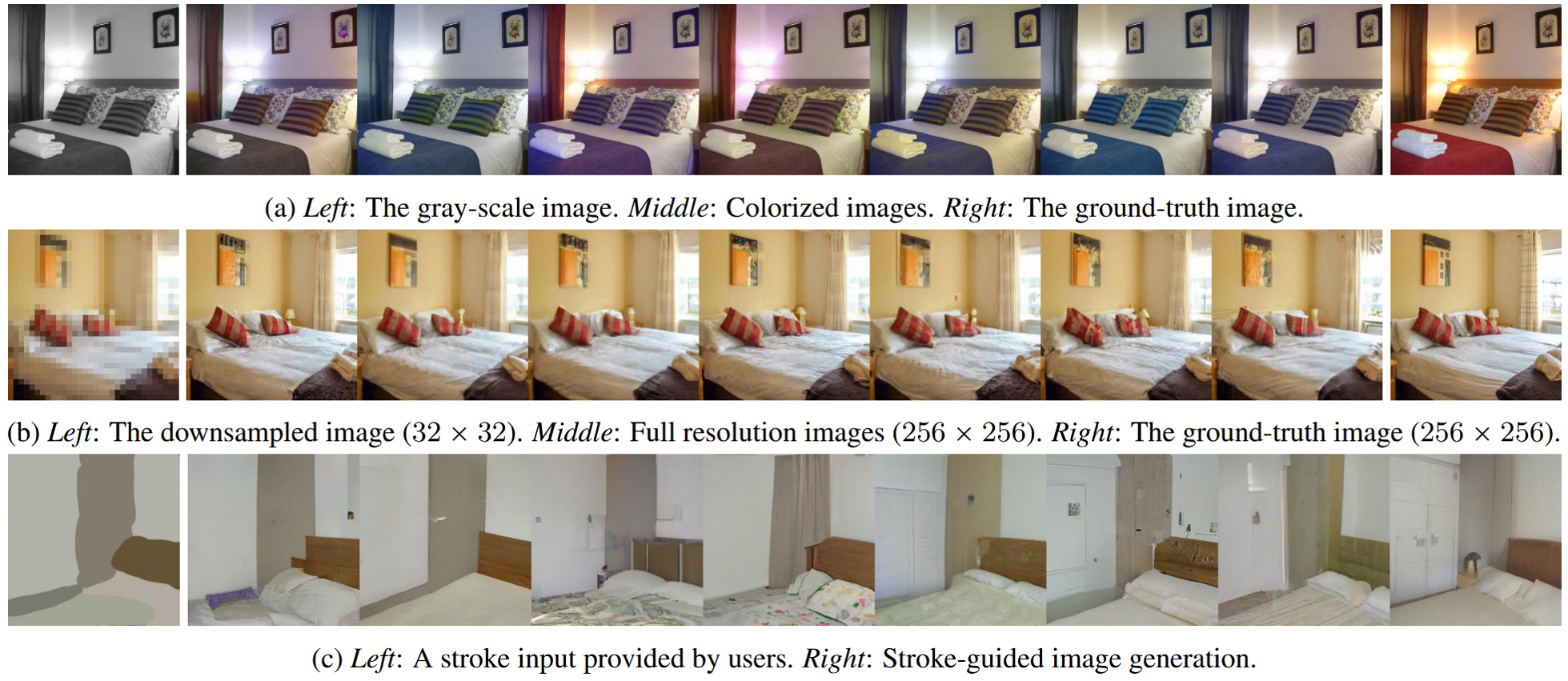

OpenAI – Improved DDPM (Nichol & Dhariwal, 2021)

| Iters | T | Schedule | Objective | NLL | FID |
|---|---|---|---|---|---|
| 200K | 1K | linear | L_simple | 3.99 | 32.5 |
| 200K | 4K | linear | L_simple | 3.77 | 31.3 |
| 200K | 4K | linear | L_hybrid | 3.66 | 32.2 |
| 200K | 4K | cosine | L_simple | 3.68 | 27.0 |
| 200K | 4K | cosine | L_hybrid | 3.62 | 28.0 |
| 200K | 4K | cosine | L_vlb | 3.57 | 56.7 |
| 1.5M | 4K | cosine | L_hybrid | 3.57 | 19.2 |
| 1.5M | 4K | cosine | L_vlb | 3.53 | 40.1 |

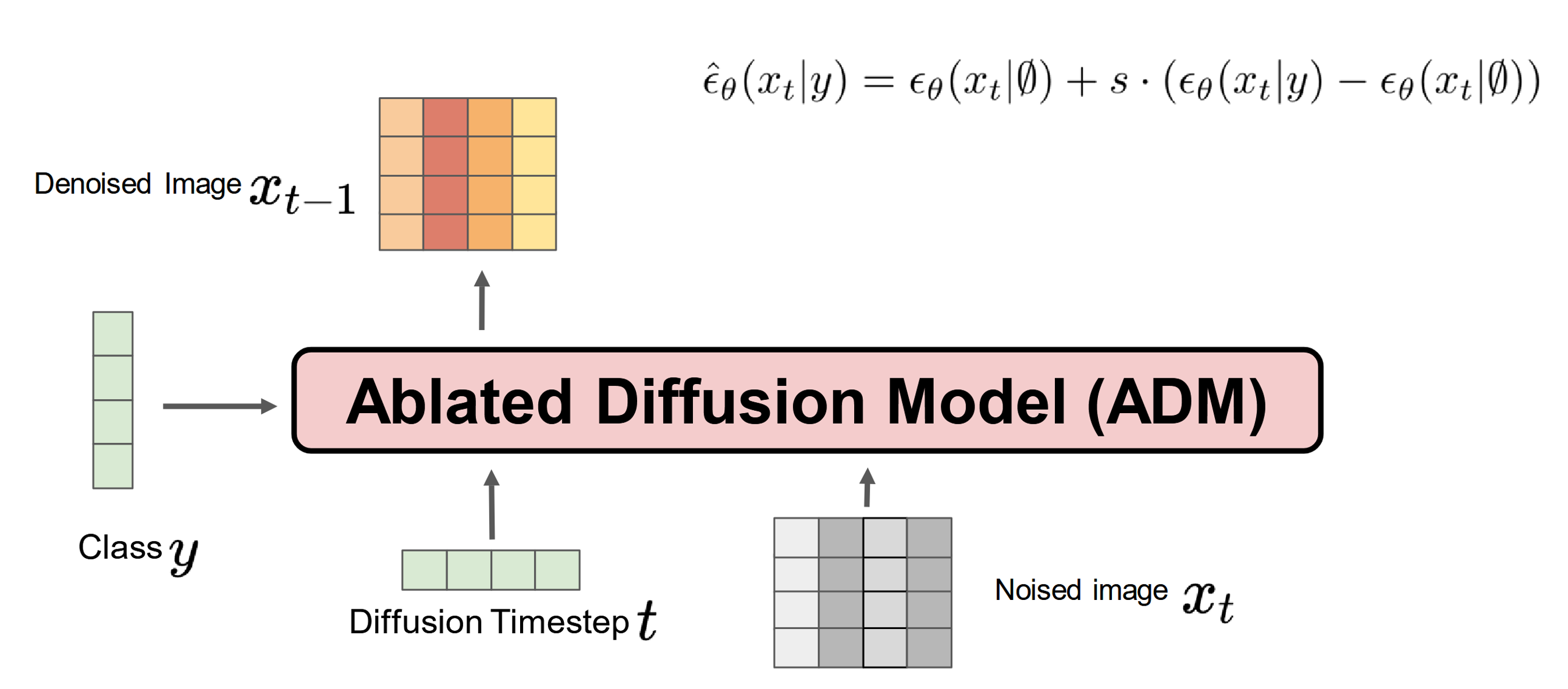
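
The Schedule column in the table contrasts a linear and a cosine noise schedule. As a rough illustration, here is a minimal NumPy sketch of the two, assuming the commonly used linear endpoints (1e-4 to 0.02 at T = 1000, rescaled for other T) and a cosine offset of s = 0.008. The function names are illustrative and do not come from the slides.

```python
import numpy as np

def linear_beta_schedule(T, beta_start=1e-4, beta_end=0.02):
    # Endpoints are defined for T = 1000 (assumption); rescale them for other T.
    scale = 1000.0 / T
    return np.linspace(scale * beta_start, scale * beta_end, T)

def cosine_beta_schedule(T, s=0.008, max_beta=0.999):
    # alpha_bar(t) follows a squared cosine; betas come from consecutive ratios.
    steps = np.arange(T + 1) / T
    alpha_bar = np.cos((steps + s) / (1 + s) * np.pi / 2) ** 2
    betas = 1.0 - alpha_bar[1:] / alpha_bar[:-1]
    # Clip to avoid a degenerate step near t = T.
    return np.clip(betas, 0.0, max_beta)

def cumulative_alpha(betas):
    # Fraction of signal variance remaining after each forward step.
    return np.cumprod(1.0 - betas)

T = 4000
abar_linear = cumulative_alpha(linear_beta_schedule(T))
abar_cosine = cumulative_alpha(cosine_beta_schedule(T))

# Compare how much signal survives halfway through the forward process.
print("linear:", abar_linear[T // 2], "cosine:", abar_cosine[T // 2])
```

Under these assumptions the cosine schedule keeps noticeably more signal at the midpoint, so information is destroyed more gradually than with the linear schedule.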

OpenAI – Ablated Diffusion Model (ADM) (Dhariwal & Nichol, 2021)

This slide is derived from https://www.crcv.ucf.edu/wp-content/uploads/2018/11/Group-6-Paper-2-Dalle-2.pdf.

Classifier Guidance (Dhariwal & Nichol, 2021)

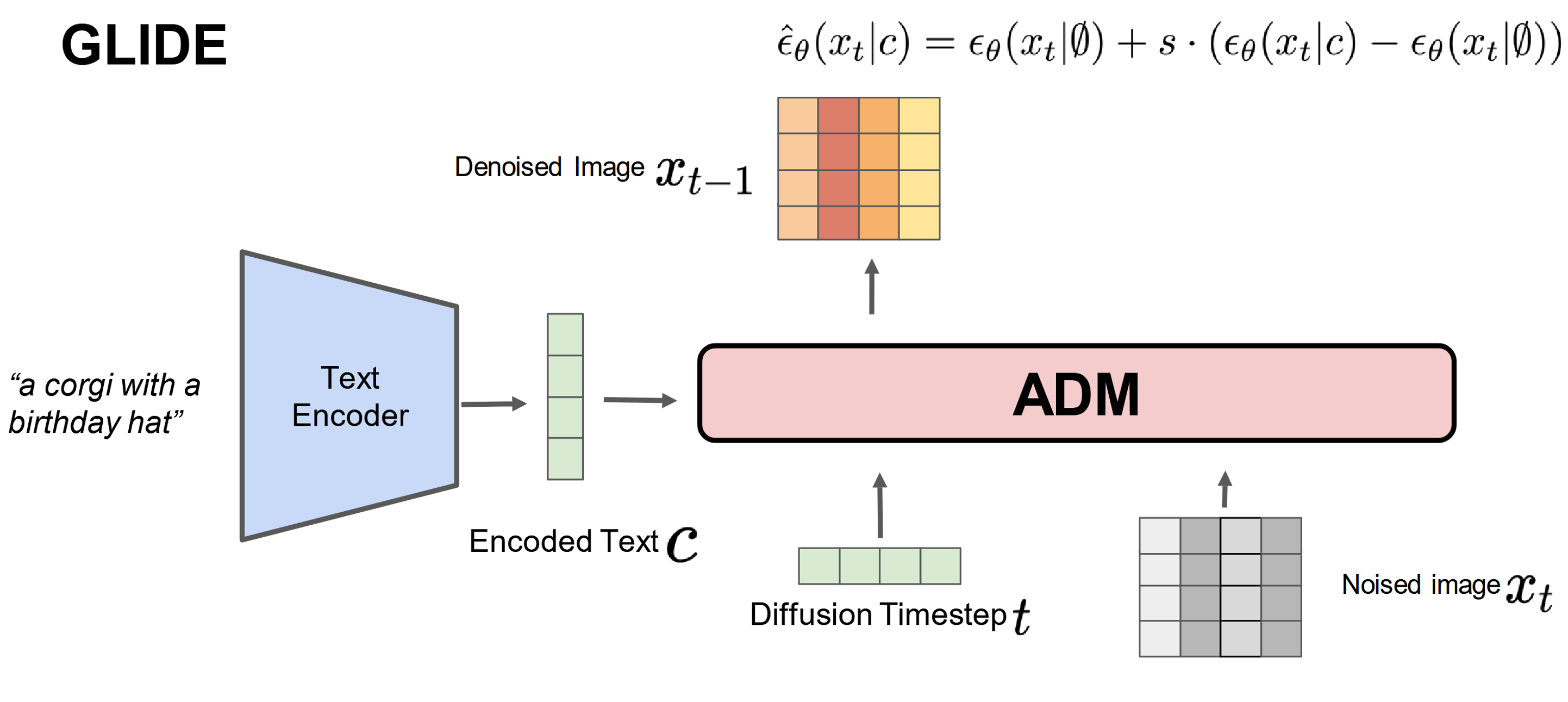

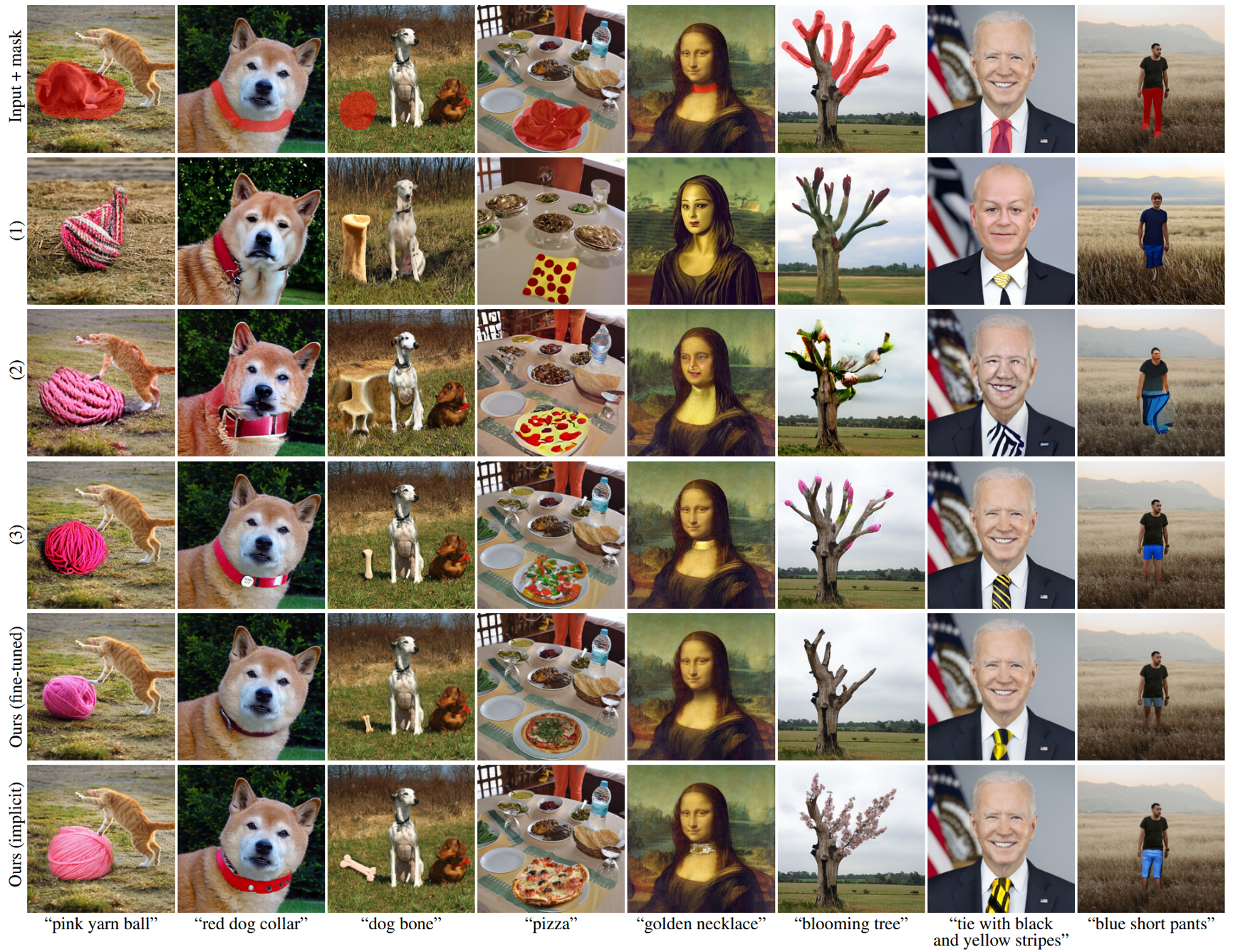
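
As a hedged sketch of the idea behind classifier guidance: each reverse step samples from a Gaussian whose mean is shifted by the scaled gradient of a classifier's log-probability, i.e. mean + s · Σ · ∇ log p(y | x_t). The toy "classifier" below is a hypothetical quadratic stand-in, and all names are illustrative rather than taken from the slides.

```python
import numpy as np

def guided_mean(mean, variance, classifier_grad, scale=1.0):
    """Return the reverse-step mean shifted along the classifier gradient.

    mean, variance: parameters of the unguided Gaussian p(x_{t-1} | x_t),
                    with a diagonal covariance stored as a vector.
    classifier_grad: gradient of log p(y | x_t) with respect to x_t.
    scale: guidance scale s; larger values trade diversity for fidelity.
    """
    return mean + scale * variance * classifier_grad

# Hypothetical stand-in for a classifier: log p(y | x) = -0.5 * ||x - target||^2,
# whose gradient with respect to x is (target - x).
x_t = np.array([0.0, 0.0])
target = np.array([1.0, -2.0])
grad = target - x_t

mean = x_t.copy()            # pretend the denoiser predicts no change
variance = np.full(2, 0.5)   # diagonal covariance of the reverse step

shifted = guided_mean(mean, variance, grad, scale=2.0)

# Sample x_{t-1} from the guided Gaussian.
rng = np.random.default_rng(0)
x_prev = shifted + np.sqrt(variance) * rng.standard_normal(2)
```

In a real model the gradient would come from a classifier trained on noisy images and evaluated at the current timestep; the quadratic form here only keeps the example self-contained.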

OpenAI – GLIDE (Nichol et al., 2022)

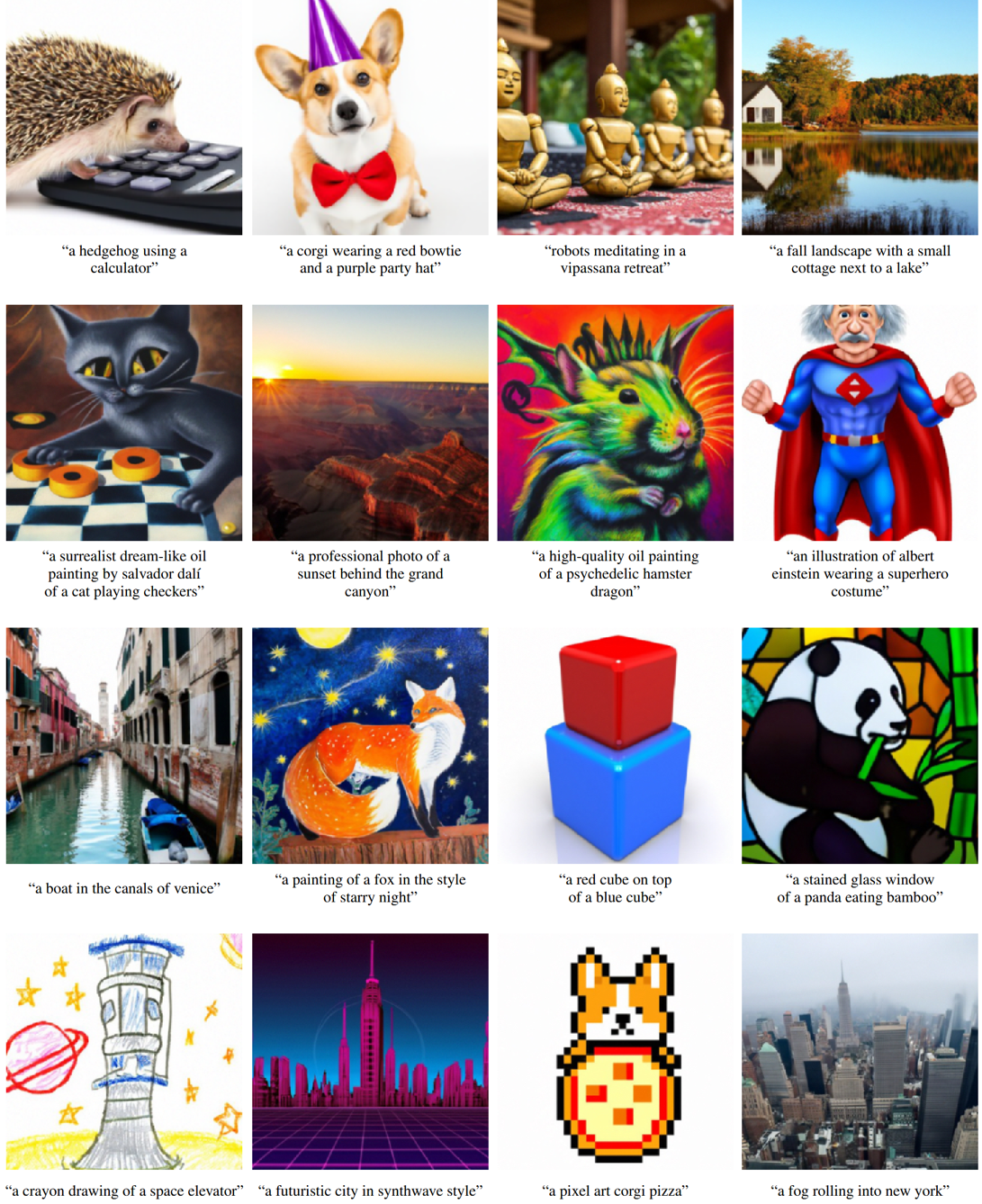

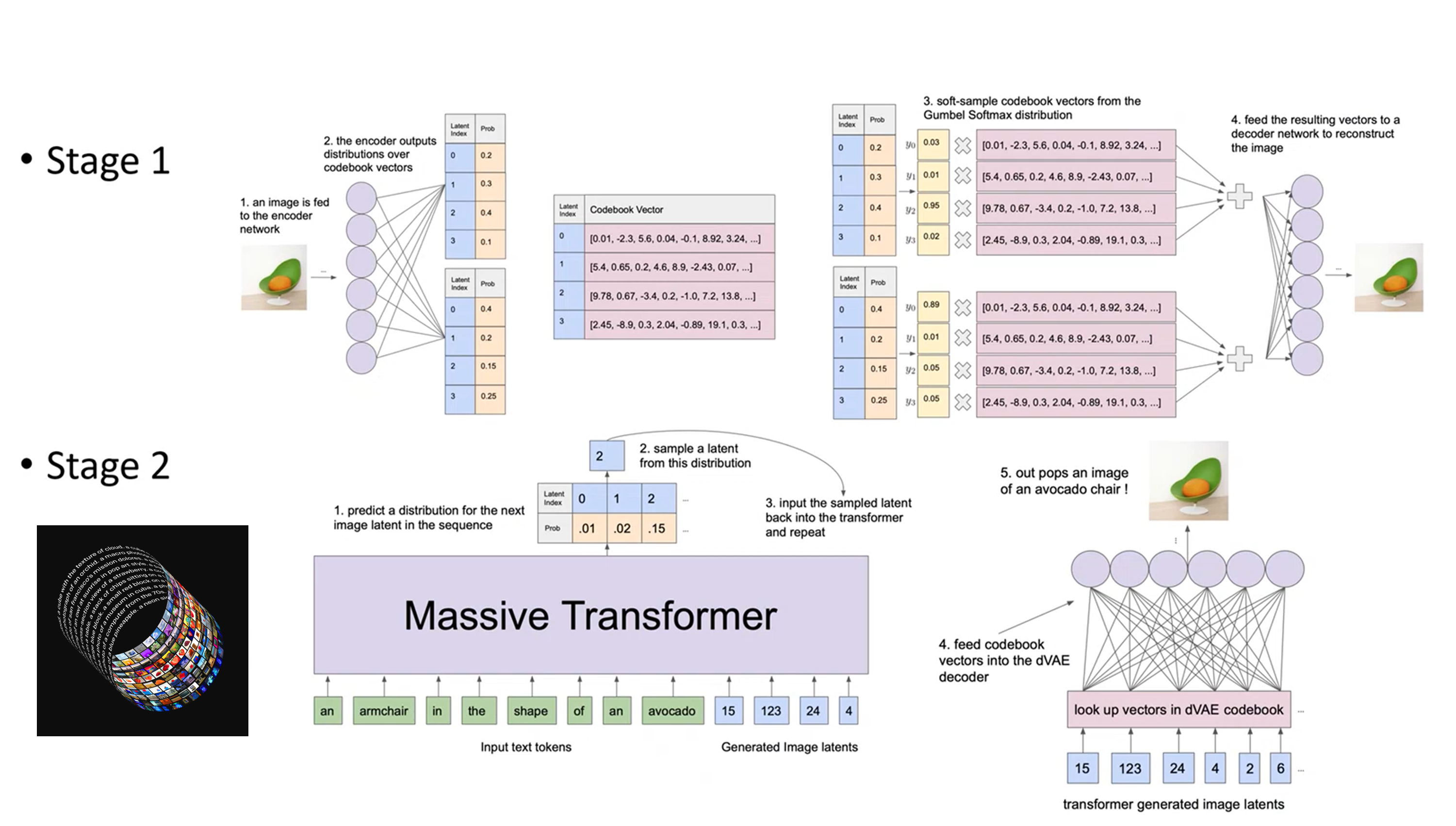

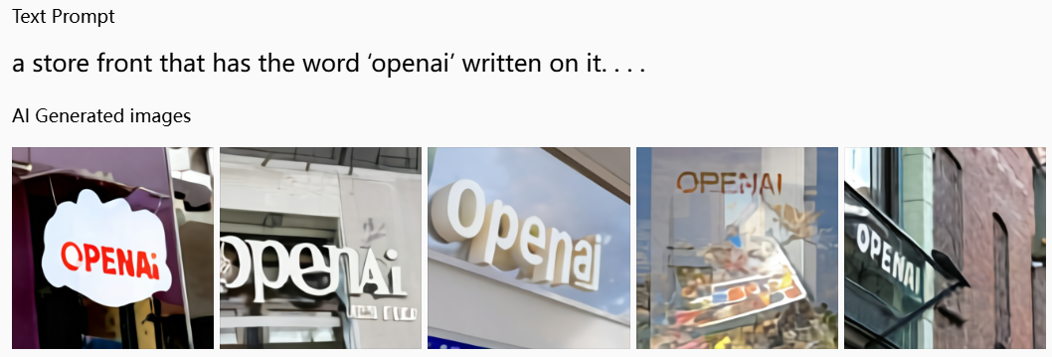

DALL-E (Ramesh et al., 2021)

This slide is derived from https://www.youtube.com/watch?v=oENCNi4JxPY.

Image Credit: https://openai.com/index/dall-e/.

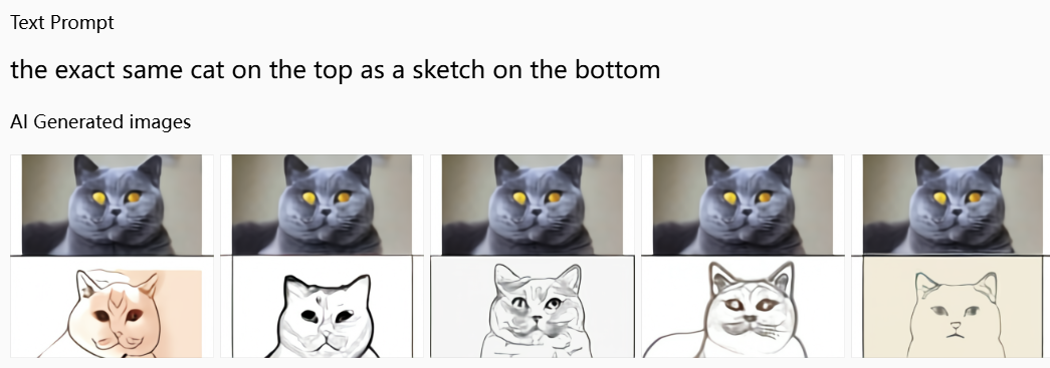

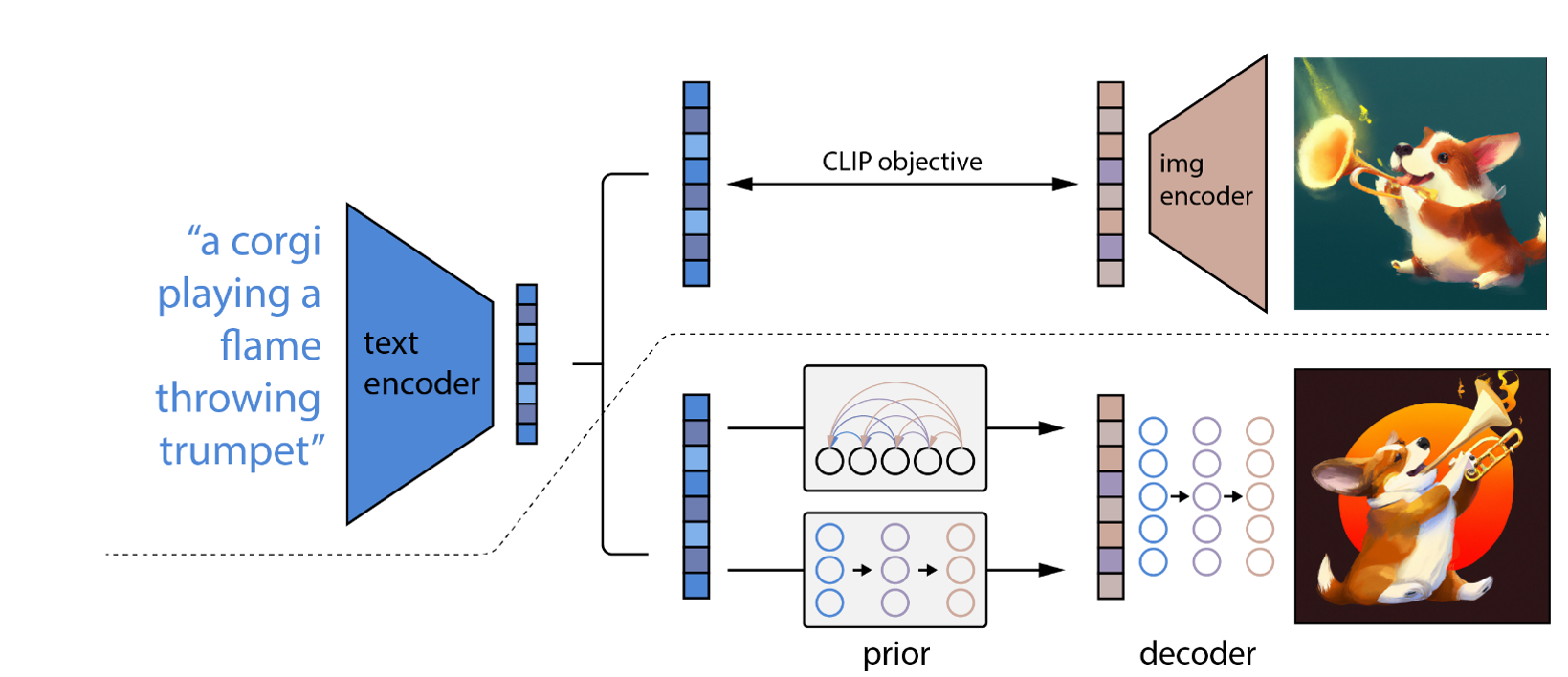

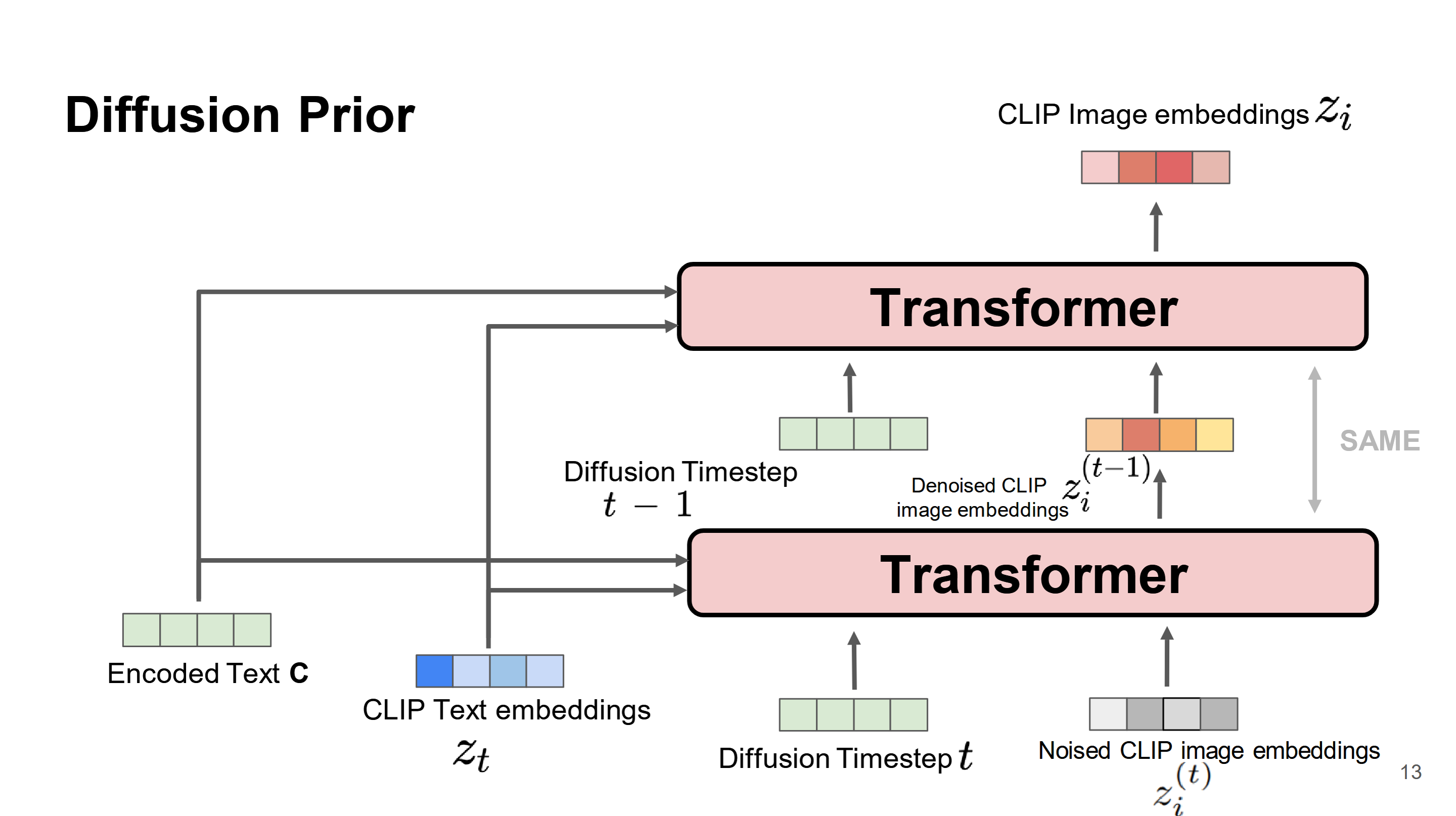

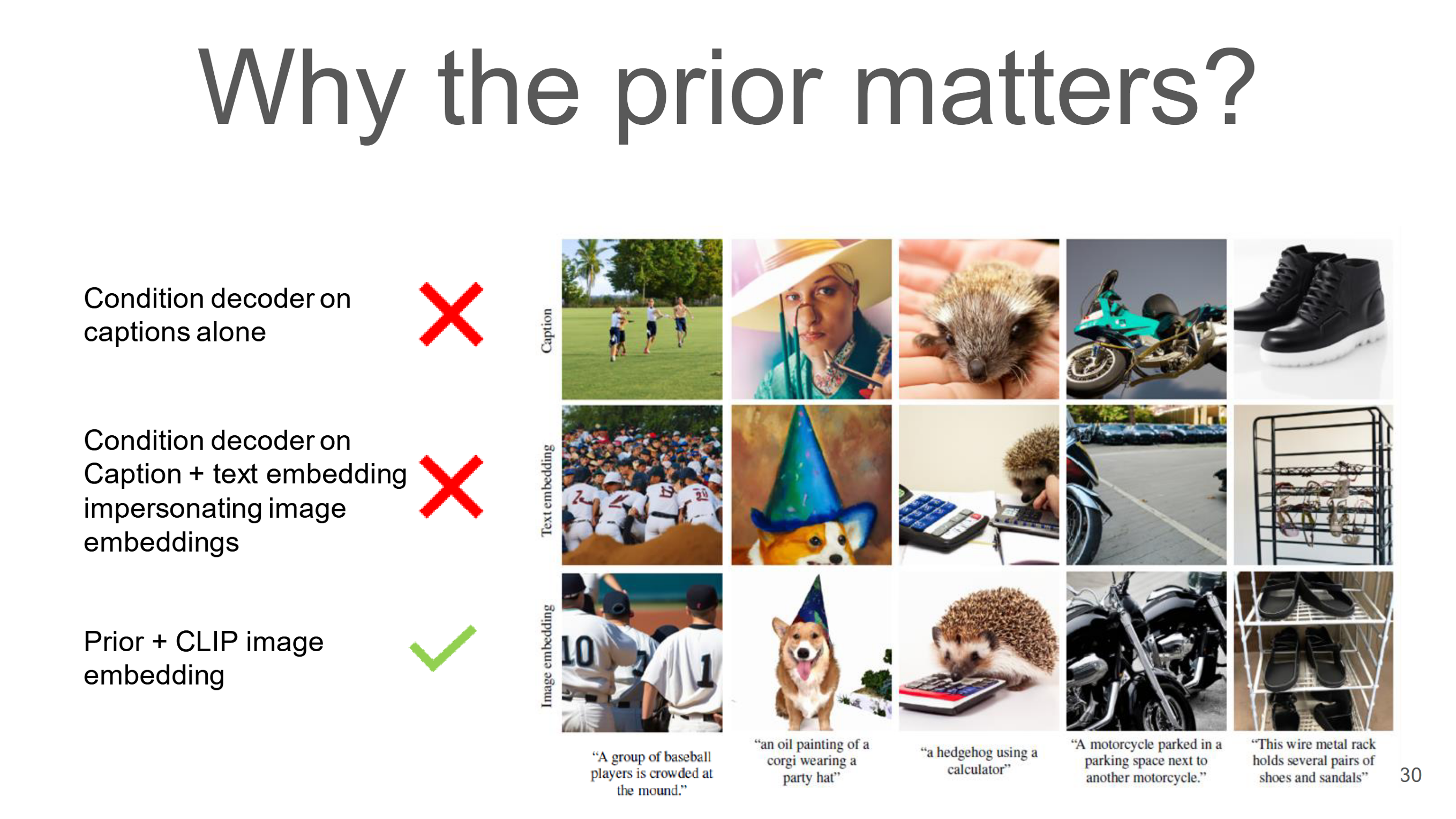

OpenAI – unCLIP/DALL-E 2 (Ramesh et al., 2022)

Image Credit: https://openai.com/index/dall-e/.

This slide is derived from https://www.crcv.ucf.edu/wp-content/uploads/2018/11/Group-6-Paper-2-Dalle-2.pdf.


OpenAI – DALL-E 3 (Betker et al., 2023)

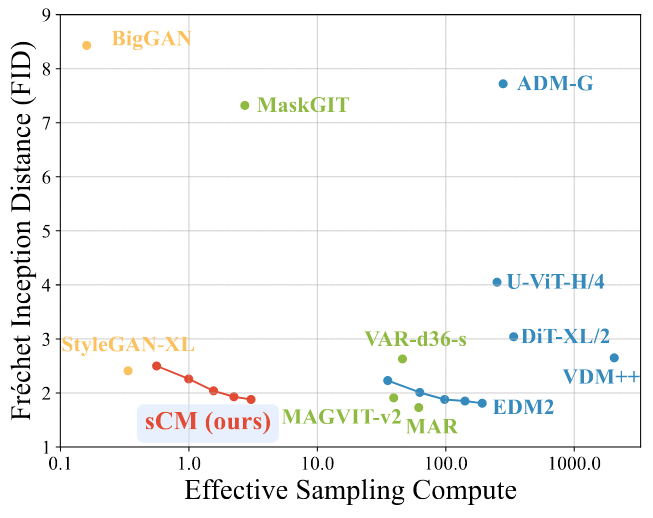

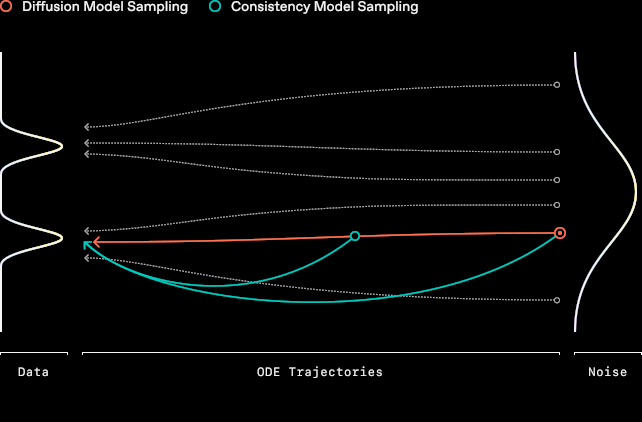

OpenAI – Consistency Training & Consistency Distillation (Song et al., 2023)

Source: Lu & Song, ICLR 2025.

References

- Chen, M., Radford, A., Child, R., Wu, J., Jun, H., Luan, D., & Sutskever, I. (2020). Generative Pretraining From Pixels. Proceedings of the 37th International Conference on Machine Learning (ICML 2020) (pp. 1691--1703).

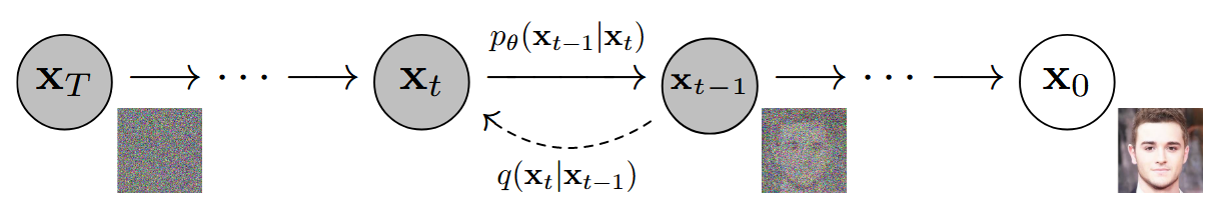

- Ho, J., Jain, A., & Abbeel, P. (2020). Denoising Diffusion Probabilistic Models. Advances in Neural Information Processing Systems 33 (NeurIPS 2020) (pp. 6840--6851).

- Song, Y., & Ermon, S. (2019). Generative Modeling by Estimating Gradients of the Data Distribution. Advances in Neural Information Processing Systems 32 (NeurIPS 2019).

- Song, Y., Sohl-Dickstein, J., Kingma, D. P., Kumar, A., Ermon, S., & Poole, B. (2021). Score-Based Generative Modeling through Stochastic Differential Equations. Proceedings of the 9th International Conference on Learning Representations (ICLR 2021).

- Nichol, A., & Dhariwal, P. (2021). Improved Denoising Diffusion Probabilistic Models. Proceedings of the 38th International Conference on Machine Learning (ICML 2021) (pp. 8162--8171).

- Ramesh, A., Pavlov, M., Goh, G., Gray, S., Voss, C., Radford, A., Chen, M., & Sutskever, I. (2021). Zero-Shot Text-to-Image Generation. Proceedings of the 38th International Conference on Machine Learning (ICML 2021) (pp. 8821--8831).

- Nichol, A., Dhariwal, P., Ramesh, A., Shyam, P., Mishkin, P., McGrew, B., Sutskever, I., & Chen, M. (2021). GLIDE: Towards Photorealistic Image Generation and Editing with Text-Guided Diffusion Models. arXiv preprint arXiv:2112.10741.

- Ramesh, A., Dhariwal, P., Nichol, A., Chu, C., & Chen, M. (2022). Hierarchical Text-Conditional Image Generation with CLIP Latents. arXiv preprint arXiv:2204.06125.

- Karras, T., Aittala, M., Aila, T., & Laine, S. (2022). Elucidating the Design Space of Diffusion-Based Generative Models. Proceedings of the 36th Conference on Neural Information Processing Systems (NeurIPS 2022).

- Song, Y., Dhariwal, P., Chen, M., & Sutskever, I. (2023). Consistency Models. Proceedings of the 40th International Conference on Machine Learning (ICML 2023).

- OpenAI. (2023). DALL·E 3. https://openai.com/dall-e-3.

- Song, Y., & Dhariwal, P. (2024). Improved Techniques for Training Consistency Models. Proceedings of the 12th International Conference on Learning Representations (ICLR 2024).

- Lu, C., & Song, Y. (2025). Simplifying, Stabilizing and Scaling Continuous-Time Consistency Models. Proceedings of the 13th International Conference on Learning Representations (ICLR 2025).

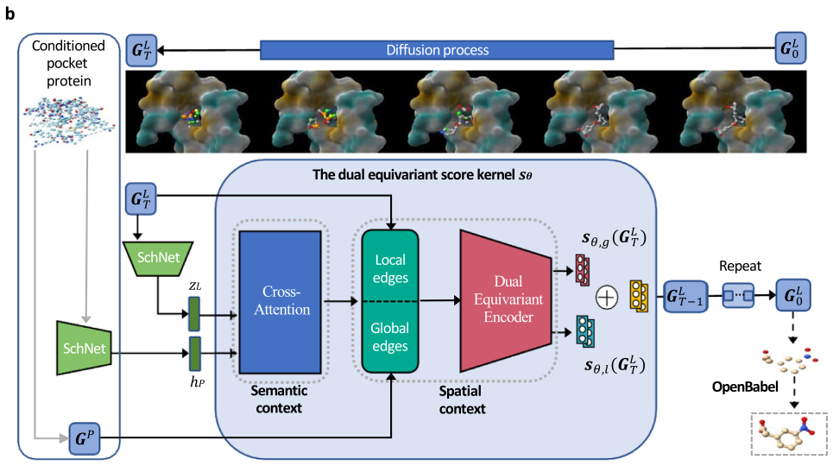

- Huang et al. (2024). A Dual Diffusion Model Enables 3D Molecule Generation and Lead Optimization Based on Target Pockets. Nature Communications.

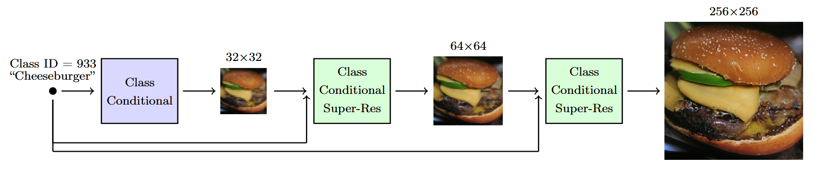

- Ho et al. (2022). Cascaded Diffusion Models for High Fidelity Image Generation. JMLR.

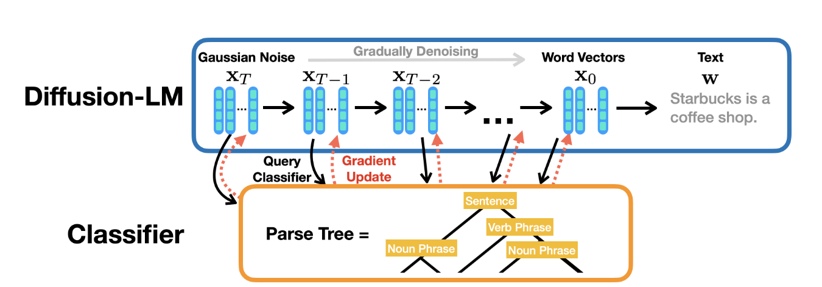

- Li et al. (2022). Diffusion-LM Improves Controllable Text Generation. NeurIPS.

- Kong et al. (2020). DiffWave: A Versatile Diffusion Model for Audio Synthesis. ICLR.

Contact: bili_sakura@zju.edu.cn

© 2024 Sakura